-- Greg Armstrong --

Last Update: March 7, 2023

The University of Wisconsin’s excellent online interactive Logic Model training is a valuable, easy-to-use introduction to RBM – and it is free.

Level of Difficulty: Easy to moderate

Primarily Useful as: An introduction to RBM and Logic Models

Length: 3-5 hours online, 216-page downloadable PDF, and an 86-page teaching and training guide

Limitations: The online course no longer has audio, but individual components are available for download

Who this is for

Like other consultants and trainers who work on international development issues, I usually work with groups, either donor or implementing agencies, training 10-200 people on RBM. This reduces the cost (to them) of the training time, travel and other expenses. But readers of this site or the RBM Training website sometimes ask if I can provide training on results based management for individuals. This would, however, be so expensive that it would not be practical. Other options such as enrolling in university courses in Europe or Canada might work, but often require more time, and again, more money, than an individual might be prepared to invest.

But there is an excellent resource available online to introduce RBM to anyone who has no experience with it – or, for that matter, to refresh even the most jaded RBM practitioner’s interest. This is the University of Wisconsin Extension Department’s Enhancing Program Performance with Logic Models. The course was developed in 2002, originally put online in 2003, and revised in 2022. There is very little in this easy-to-use, interactive course that is not still relevant today, in 2023, to those who are trying to understand how to use results-based management.

This course is one of 11 offered free by the Program Planning and Evaluation Division of the University of Wisconsin's Extension Department.

Program Planning and Evaluation courses offered by the Program Planning and Evaluation Division, University of Wisconsin Extension Department

Format: Interactive Online learning and a number of downloadable components

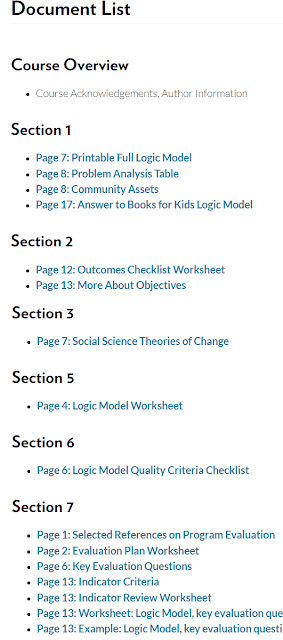

When I first saw this course in 2011 it had both an interactive online format and, for those who did not want to, or could not, use the internet for the 2-5 hours that completing the course takes, a PDF version of the course. The real charm of this course, however, is that it is interactive, and it is the engaging nature of the interaction that cannot be replicated in the downloadable PDF. The downloadable PDF version is still available, and the course itself has a number of useful additions, including the boring-sounding but very useful Document List, which has 16 different downloadable documents including very practical worksheets and background information on social science issues.

Downloadable components of the University of Wisconsin Online Logic Model Course

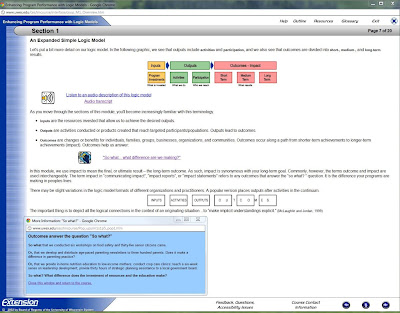

Presentations in the course’s 7 sections, both audio and text, are supplemented with pop-up windows, which participants can view if they want additional examples or references. These are followed by exercises and then feedback on the answers.

University of Wisconsin Extension Online Logic Model Training

It should not be surprising that the interactive elements are as engaging as I found them to be, because this site was developed by a team of content and technical experts at the Extension Department of the University of Wisconsin – and extension departments are almost always the group in any university most skilled in tailoring learning events to learners’ needs and learning styles.

Each of the course’s 7 sections contains the following elements:

- Section Overview

- Section Goals

- Section Outline with clickable links to the components of the section

- Content presentation

- Activities that require us to put into practice the theory on the preceding pages.

Some of the sections also contain a section on the research or public administration background to the topic, and a bibliography.

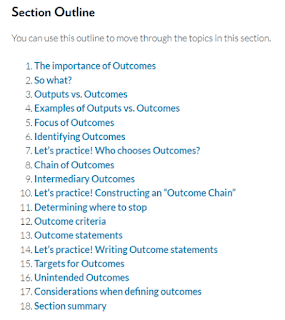

Here is what the Section Outline typically looks like, using Section 2, which focuses on defining Outcomes:

18 Clickable Topics in Section 2 on Defining Outcomes

Course Contents: Reinforcing important lessons about RBM

The course title is Enhancing Program Performance with Logic Models, but it deals with all of the associated elements of results-based management, not just the Logic Model. Nothing in it will be a major surprise to people who work regularly with RBM, but what this course teaches users about results-based management is worth repeating, simply because these lessons are often neglected or ignored by even the most experienced users of RBM.

The course has 7 content sections, each of which generally takes between 20 and 40 minutes to complete.

What is a Logic Model?

Section 1 discusses the difference between Outputs and Outcomes, the need to test assumptions, and the need to identify risk. This section covers 20 screens in the course, and runs from pages 7-58 in the downloadable PDF. The difference is that in the PDF, transcripts of the audio from the online course, and a number of worksheets, are included as text.

This introduction to Logic Models includes, among much else, an interesting interactive Logic Model puzzle, requiring us to test our understanding of sequence, timing, risk and results by placing 20 different statements about a programme into one of 8 categories, such as resources, activities, participants, Outputs, Outcomes, assumptions and external factors (or risk).

University of Wisconsin Logic Model course - interactive puzzle. Copyright: 2002, Board of Regents, University of Wisconsin

This is not dissimilar to what you can do in a group with paper and scissors, but nevertheless engaging if you are working alone, and trying to ensure you understand the ideas of sequence, scale, change and other factors.

More about Outcomes

Section 2 takes us in detail through the different types of legitimate results that we can see at individual, group, agency, system, or community levels, and talks about why participation of stakeholders in defining Outcomes is important.

This section includes material about RBM that more than one of the UN development agencies could usefully review. Some of these agencies – not all, but some important ones – get hung up on completing activities (Outputs) and never seem to move on, in practice, to assessing whether these lead to any real change, or result (Outcomes).

Section 2 uses 19 screens in the online course, and 32 pages in the PDF, which includes all of the associated supplementary material found in the pop-ups and links of the online course.

More About Program Logic

Section 3 introduces (or reintroduces to forgetful RBM cognoscenti) the concept of clarifying the logic and assumptions implied in designing activities with the intention of contributing to a result. It covers theories of change, the complexity of real-life programme logic, results chains – what it calls Outcome Chains – and whether it is reasonable to claim causality, which is part of the current discussion on attribution of results to programme or project interventions.

It is important to note here, for those sometimes justifiably cynical about RBM who focus on complexity, that this course itself makes the point that multiple, often unplanned or unforeseen factors can affect results, not just the programme interventions. It also discusses the idea that a simple, linear logic model may not reveal enough of the factors involved in achieving results, and why more complex logic models may be needed to underpin the simple ones that we are often forced to submit to funding agencies.

Another interactive exercise here helps us to think through the theory of action behind a programme intervention. Despite the fact that this section has only 9 screens in the online course, and 24 pages in the PDF, the complexity of the interactive exercise on page 7 means it can well take 30 or 40 minutes to finish this section.

University of Wisconsin online Logic Model course, interactive exercise testing theories of action. Copyright: 2002, Board of Regents, University of Wisconsin

This exercise is also an example of why, if you can, it is more productive in terms of learning to go to the online course first, before using the PDF, because with the PDF material there is essentially no chance to test your own understanding of the processes, before you see the “answer”.

What does a Logic Model Look Like?

Section 4 discusses why logic models, depending on the situation, purpose and culture involved, can take many different forms. There is no single format for Logic Models that suits all needs when the intellectual process of testing theories of change is involved – even though there may be, for any given donor, only one format they want to see. There are, as the course points out, situations where Logic Models may differ in size and complexity, depending on whether they are being used to test ideas for programme planning, for evaluation, for communication or, for programme and project managers, as the core of implementation activities.

Once again, as a note to complexity theorists, the course makes the point that logic models need not be simplistic, linear creations, but can be useful in helping agencies and individuals understand the complexity of the systems involved in interventions.

This section takes 8 screens in the online course and there are several downloadable examples and worksheets.

How do I draw a Logic Model?

Section 5 is perhaps the most important of the course because it focuses on the real need to develop logic models as a group, not as an individual.

“As you work through this section, you will appreciate that the best way to construct a logic model is with others. While it may be quicker and easier to work alone, try not to. Many people believe that the real value of logic modeling is the PROCESS of creating one and the understanding and consensus that you build about a program as a result.” [Section 5, screen 1, PDF: p. 127]

I can only say I agree completely. In the Results Based Management training sessions I do with donor agencies, implementing organizations and national partners, feedback suggests that 80-90% of participants were absorbed by the Logic Model development process, sometimes surprised at how often the discussions reveal previously unknown or unacknowledged differences in perception among close colleagues, about what the original problem is, what risks and assumptions they have, and what reasonable results could look like.

5 different approaches to Logic Model development

All of the approaches focus on Logic Model development as a group. The first four approaches are intended for planning new programmes. These can be found in the online course, by clicking on the links at the bottom of the screen on creating a logic model for a new programme, or on page 136 of the PDF. All four of these approaches begin with the result, and move back to activities and necessary resources.

- Start with the Long-term result or Outcome, and move back through mid-term or intermediate results, to short-term results, then to the Outputs needed to achieve these, then back again to activities and finally to inputs.

- Start with the long-term desired result, but then move immediately to activities, which are often the primary interest of participants, and then test whether these will in fact contribute to short or mid-term results which can logically relate to the long-term result.

- Start with the Long-term result, then brainstorm all of the elements that will affect this – activities, short, mid-term results, participants, risks, then sort them out to see if participants agree on the relationships and sequence.

- Juxtapose the situation or problem with a long-term desired change or Outcome, then move back through the mid-term and short-term results necessary to get there, and finally to the participants, activities and resources required. This looks similar to the first option but, in practice, in groups it really does force those with preconceptions about what the activities should be to confront the problem clearly, to look for results, and then to decide what activities and resources are needed.

- A fifth approach is also listed separately, on the next screen of the course, and this is for a situation where it is necessary to start logic model development with existing resources and existing activities.

I have seen this happen where there is a second phase to a project, or where there is just too much institutional inertia to reconceptualize how to approach a problem. It is essentially an approach focusing, as I see it, on a search for results to justify what is already being done – or, as this course suggests, where an “off the shelf” programme already exists: Ask about an existing programme why each activity exists, what possible changes it can lead to, and how this can relate to a newly identified problem.

This approach is sometimes necessary, in my experience, when working on RBM with universities, where activities such as degree course work and research are accepted as the core of university activities, and therefore the starting point for interventions. It can also be necessary with some government agencies which also sometimes see every problem through the paradigm of their own mandate and existing expertise.

Moving such institutions towards a genuine questioning of what is really likely to achieve results is sometimes quite difficult.
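For readers who think more easily in code than in diagrams, the first approach described above (working backward from the long-term Outcome to inputs) can be sketched roughly as follows. This is only an illustrative sketch, not anything taken from the course: the class name, the level names and the example statements are all my own assumptions.

```python
from dataclasses import dataclass, field

# Backward-planning order from the first approach: start with the
# long-term Outcome and work back to inputs. Level names are assumptions
# chosen for this sketch, not terminology fixed by the course.
PLANNING_ORDER = (
    "long_term_outcome",
    "mid_term_outcome",
    "short_term_outcome",
    "outputs",
    "activities",
    "inputs",
)


def _empty_levels():
    """Create one empty list of statements per level."""
    return {level: [] for level in PLANNING_ORDER}


@dataclass
class LogicModel:
    """Hypothetical container for the statements a group agrees on."""

    situation: str
    levels: dict = field(default_factory=_empty_levels)

    def add(self, level, statement):
        """Record a statement at one level of the results chain."""
        if level not in self.levels:
            raise ValueError(f"Unknown level: {level}")
        self.levels[level].append(statement)

    def missing_levels(self):
        """List levels, in planning order, that the group has not filled in."""
        return [level for level in PLANNING_ORDER if not self.levels[level]]

    def implementation_order(self):
        """Return the levels in the order they occur in practice (inputs first)."""
        return list(reversed(PLANNING_ORDER))


# Hypothetical example content, for illustration only.
model = LogicModel(situation="Low uptake of extension advice by farmers")
model.add("long_term_outcome", "Farm incomes increase")
model.add("activities", "Run field demonstration days")

# Jumping straight from the long-term result to activities leaves gaps.
print(model.missing_levels())
```

The gaps reported by `missing_levels` correspond to the discussion a group still needs to have: whether the proposed activities can plausibly lead, through short and mid-term results, to the long-term result.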

How Good is my Logic Model?

Section 6 reviews some of the pitfalls individuals or RBM workshop facilitators may encounter in the development of Logic Models, including:

- Getting lost in the RBM terminology,

- Focusing too much on the mechanical aspects of putting activities and results in boxes, without assessing the plausibility of the connections between activities and results,

- Focusing on – or complaining about – linearity, rather than exploring the complexities that some Logic Model formats can reveal,

- Confusing the development of a Logic Model with evaluation (for which it can indeed be a useful tool, but to which its utility is not limited),

- Perceiving the Logic Model as a panacea for programme or project design or implementation problems, rather than as a tool to help us find possible solutions to such problems,

- Focusing on production of the paper product, but never using it in practice.

It also makes the point that different people and agencies can use – or require the use of – Logic Models, for different purposes, and that we need to be clear about what this purpose is when we determine in how much detail we will work on Logic Model development.

This section spans 10 screens in the online programme, and 16 pages in the PDF version.

Using Logic Models in Evaluation - Indicators and Measures

Section 7 reviews how understanding the original problem, assumptions, risk and the intended logic of the results chain can help agencies determine what evaluation questions to ask during different types of evaluations – questions about, and indicators relevant to:

- The quality of inputs and completed activities (Outputs),

- Who is participating, and what is the reach of the activities,

- Assumptions underlying programme design, and selection of activities,

- Whether results are actually achieved, and to what extent they can be reasonably attributed to the intervention, and

- The effect of external factors – including what many agencies refer to as risk – on the achievement of results, or the failure to achieve results.

Examination of a Logic Model and how it is formed can provide the foundation for:

- Needs assessments (assessing the original problem, and what can be done about it),

- Process evaluations (assessment of inputs and the quality of activities),

- Outcome and Impact evaluations (whether changes occurred and to what extent programme or project activities, and also external factors, may have contributed to such change).

Section 7 is the longest of the course, with 20 online screens and 49 pages in the downloadable PDF. The indicator discussions run from screens 9-20 in section 7, and pages 178-205 in the PDF.

Glossaries and references on logic model development, RBM and evaluation

The course formally ends with section 7, but it is worth taking a look at the other resources included:

- The useful glossary of terms related to results-based management, evaluation, data collection and data analysis. Plain language definitions for 61 terms are provided, and this can be accessed on every page of the online course from the upper right side of each screen, or from the PDF on pages 207-211.

- The bibliography of references related to results based management and evaluation can also be reached by clicking on “resources” at the top of each screen or on pages 212-216 of the PDF. The bibliography includes 72 references, 8 of which have clickable links that still appear to be functional. Most of the 72 articles or references were written between 1994 and 2003, but it is worthwhile in particular visiting the Centers for Disease Control evaluation resources page, which has a lot of very useful, accessible, and in some cases more current, guides on evaluation, logic models and data collection. The link in the bibliography is not current, but the page will automatically redirect to the new location, which I have provided in the previous sentence.

- 11 additional links on evaluation issues such as questionnaire design, surveys, focus groups, quasi-experimental design and other issues can be found throughout section 7 online, or on page 199 of the PDF.

- Finally, the course also provides links to 15 downloadable logic model worksheets or hints, in PDF and sometimes Microsoft Word format, also under “resources” at the top of each page. These can also be found scattered throughout the PDF document.

Limitation

Because this course was developed in 2002 and redeveloped in 2022, most people will have computers capable of making use of the interactive elements of the site – but there is one potential limitation for some of the potential users in Africa, Asia or Latin America. A reliable internet connection is needed to use the site, and in many of the places where my colleagues and I have worked, such connections can be, at times, unreliable. You might, for example, have a half hour of access to read things like this blog, but it would be frustrating to be interrupted in the middle of this online course.

Alternative provided: Users can download the 216-page PDF version of the course, which includes the text of the whole course.

For those who are interested in training of trainers on RBM, the Teaching and Training Guide is also useful, providing templates, schedules and outlines for activities.

The bottom line: This is an excellent introduction to results based management, focusing on logic model development, and its design is a credit to its authors' adult education abilities. It does not pretend to replace group workshops but it provides an intelligent, practical, and easy to use walk-through of the main issues in RBM.

_____________________________________________________________